
Your team now includes members who never sleep, never take vacation, and never complain about workload. They also never question bad decisions, never push back on unrealistic timelines, and never tell you when your strategy is wrong.

Managing humans and AI agents together is not traditional management with a few bots added. It is a fundamentally different discipline that requires new frameworks, new skills, and new ways of thinking about accountability, quality, and culture.

Most companies are getting this wrong. Here is how to get it right.

The Hybrid Team Reality

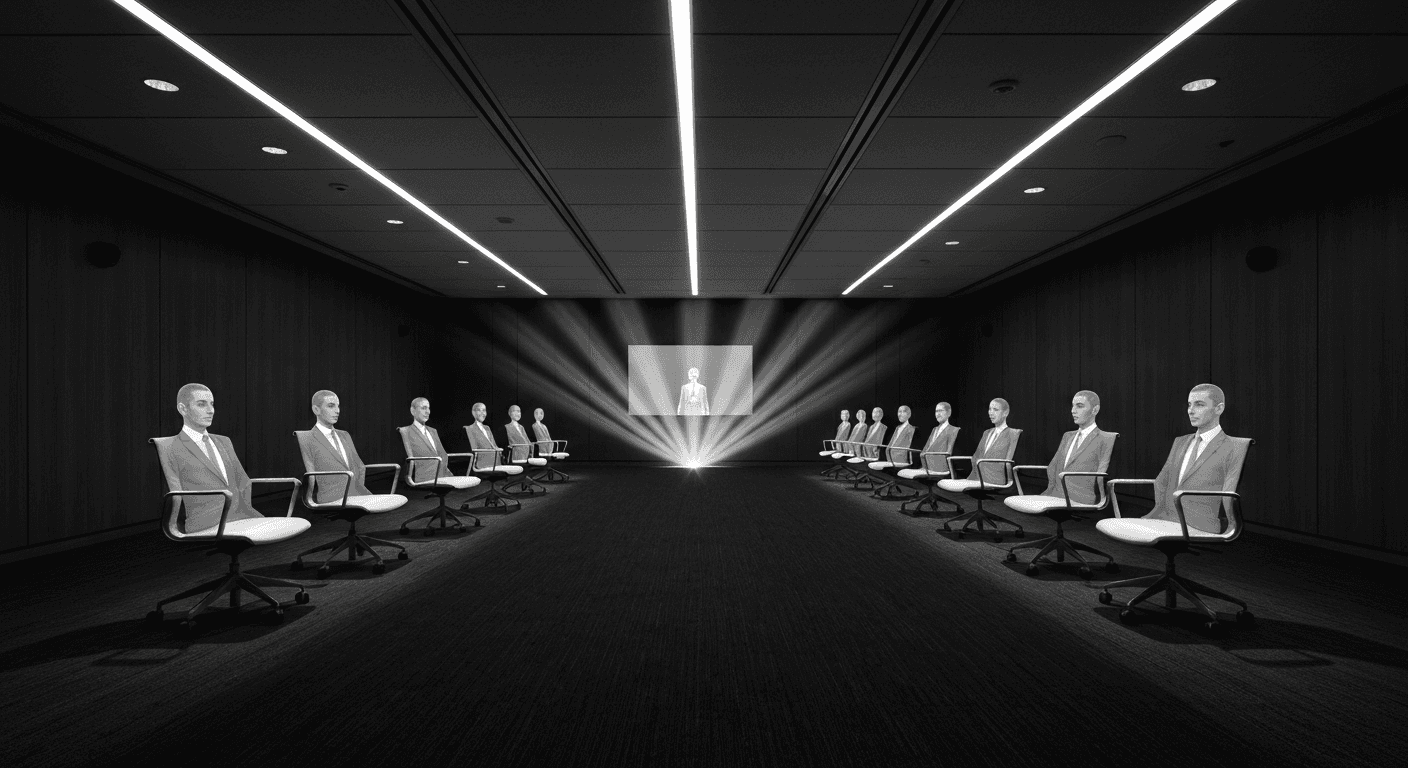

A modern team might look like this. Two human designers who handle creative direction and client communication. Three AI agents who produce design variations, resize assets, and generate mockups. One human project manager who coordinates everything. Two AI agents who handle scheduling, status updates, and documentation.

That is a team of eight. Five of them are AI. And the dynamics are completely different from an all-human team.

The AI agents are incredibly productive. They work around the clock. They do not get distracted. They do not have off days. But they also do not understand context without being told. They do not sense when a project is going off the rails. They do not build relationships with clients. They execute exactly what they are told, no more and no less.

The humans provide what AI cannot. Judgment. Creativity. Relationship building. Strategic thinking. Emotional intelligence. The ability to say "this approach is not going to work" before significant resources are wasted.

The manager's job is to design workflows that leverage each member's strengths. AI handles volume, consistency, and speed. Humans handle nuance, judgment, and adaptation. The magic happens at the interface between the two.

Communication Protocols That Actually Work

In an all-human team, communication is mostly informal. Slack messages, quick calls, hallway conversations. Everyone understands context implicitly. You can say "make it pop" and your designer knows what you mean because they have been working with you for two years.

AI agents do not understand implicit context. They need explicit, structured communication. And here is the upside: when you design communication protocols for AI agents, the human team members also benefit from that structure.

Every task assignment, whether to a human or an AI agent, should include four elements. What is the desired outcome? What are the specific requirements and constraints? What is the quality standard? When is it due?

For human team members, this structure prevents the ambiguity that causes rework. For AI agents, it provides the explicit instructions they need to produce useful output. The same protocol works for both, which simplifies management considerably.
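
The four elements can be captured in a small shared structure. This is a minimal sketch, not a prescribed tool: the `TaskBrief` name, its field names, and the `missing_fields` helper are hypothetical, chosen only to mirror the four questions above.

```python
from dataclasses import dataclass, fields
from datetime import date


@dataclass(frozen=True)
class TaskBrief:
    """One assignment, addressed to a human or an AI agent (hypothetical schema)."""
    outcome: str        # what is the desired outcome?
    requirements: str   # specific requirements and constraints
    quality_bar: str    # the quality standard the output must meet
    due: date           # when it is due

    def missing_fields(self) -> list[str]:
        """Return the names of text fields that were left blank."""
        return [
            f.name
            for f in fields(self)
            if isinstance(getattr(self, f.name), str)
            and not getattr(self, f.name).strip()
        ]


# Illustrative values only.
brief = TaskBrief(
    outcome="Three homepage hero variations",
    requirements="Use the existing brand palette; 1440px wide",
    quality_bar="",
    due=date(2025, 1, 31),
)
print(brief.missing_fields())  # ['quality_bar']
```

Rejecting a brief with blank fields before it is assigned is one way to make the protocol hard to skip, for humans and agents alike.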

But there is a critical difference. Human team members need to know when they are reviewing AI-generated work versus original human work. This is not about distrust. It is about applying the right evaluation lens. AI-generated code needs different review attention than human-written code. AI-generated copy needs different editorial treatment than human-written copy.

Establish a clear labeling system. AI-generated outputs are marked as such. Human-generated outputs are marked as such. Outputs that are AI-generated and human-refined are marked as hybrid. Everyone knows what they are working with.
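
One way to make the three labels hard to forget is to attach them to the deliverable itself. The sketch below assumes a hypothetical `Deliverable` record and `Origin` enum; the label strings are illustrative, not a standard.

```python
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):
    AI = "ai-generated"
    HUMAN = "human-generated"
    HYBRID = "hybrid"


@dataclass
class Deliverable:
    name: str
    origin: Origin

    def refine_by_human(self) -> None:
        """An AI draft that a person has edited becomes hybrid."""
        if self.origin is Origin.AI:
            self.origin = Origin.HYBRID

    def label(self) -> str:
        """Human-readable tag that reviewers see next to the file name."""
        return f"[{self.origin.value}] {self.name}"


draft = Deliverable("landing-copy.md", Origin.AI)
print(draft.label())   # [ai-generated] landing-copy.md
draft.refine_by_human()
print(draft.label())   # [hybrid] landing-copy.md
```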

Accountability in a World Where AI Does the Work

This is the question that trips up most managers. If an AI agent produces a bug, who is accountable? If an AI agent sends a client the wrong information, whose fault is it?

The answer is simple, even if the implementation is not. The human who configured, deployed, and supervised the AI agent is accountable for its output. Full stop.

Think of it this way. If a manager delegates a task to a junior employee and the output is wrong, the manager shares accountability. They should have provided clearer instructions, reviewed the work, or chosen a different person for the task. The same principle applies to AI agents.

The human who sets up an AI agent is responsible for defining its scope, setting quality standards, reviewing its outputs, and catching errors before they reach the client. If the agent produces bad work, the question is not "why did the AI fail?" The question is "why did the human not catch it?"

This means human team members need time allocated for AI supervision. A common mistake is expecting humans to direct AI agents AND do their own work with no adjustment to their workload. AI supervision is real work. It requires attention, judgment, and time. Budget for it.

Create a review structure that matches the risk level. Low-risk AI outputs (internal documentation, data summaries, routine correspondence) get spot-checked. Medium-risk outputs (client-facing content, code changes, financial calculations) get full human review. High-risk outputs (security-sensitive code, legal documents, strategic recommendations) get expert human review.
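
The three tiers above can be written down as a lookup so that routing does not depend on anyone's memory. This is a sketch under stated assumptions: the `Risk` enum, the table names, and the `required_review` function are hypothetical, and sending unknown output types to the strictest tier is a design choice of this example, not something the tiers themselves require.

```python
from enum import Enum


class Risk(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Review level required at each risk tier.
REVIEW_POLICY = {
    Risk.LOW: "spot-check",
    Risk.MEDIUM: "full human review",
    Risk.HIGH: "expert human review",
}

# Output types grouped by the tiers described above.
OUTPUT_RISK = {
    "internal documentation": Risk.LOW,
    "data summaries": Risk.LOW,
    "routine correspondence": Risk.LOW,
    "client-facing content": Risk.MEDIUM,
    "code changes": Risk.MEDIUM,
    "financial calculations": Risk.MEDIUM,
    "security-sensitive code": Risk.HIGH,
    "legal documents": Risk.HIGH,
    "strategic recommendations": Risk.HIGH,
}


def required_review(output_type: str) -> str:
    """Return the review level for an AI output type.

    Assumption of this sketch: anything not listed is treated as high risk.
    """
    risk = OUTPUT_RISK.get(output_type, Risk.HIGH)
    return REVIEW_POLICY[risk]


print(required_review("code changes"))  # full human review
```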

Quality Control: Different Standards, Different Methods

Quality control for human work typically involves peer review, mentoring, and periodic evaluation. You trust that experienced professionals will self-correct most issues, and you catch the rest through review processes.

Quality control for AI work requires systematic validation. Not because AI is less capable, but because AI errors are different from human errors. Humans make mistakes from carelessness, fatigue, or misunderstanding. AI makes mistakes from missing context, edge cases, and training limitations.

Automated validation catches many AI errors before human review. Syntax checking for code. Fact verification for content. Format validation for data processing. Brand voice scoring for marketing copy. These automated checks filter out obvious issues and let human reviewers focus on subtle quality concerns.
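
Two of these checks are easy to sketch with the Python standard library: syntax checking for generated Python code and format validation for generated JSON. The function names and the idea of returning a list of problems are assumptions of this example; fact verification and brand voice scoring need external tooling and are not shown.

```python
import ast
import json


def check_python_syntax(source: str) -> list[str]:
    """Return a list of problems; an empty list means the code parses."""
    try:
        ast.parse(source)
    except SyntaxError as exc:
        return [f"syntax error on line {exc.lineno}: {exc.msg}"]
    return []


def check_json_format(payload: str, required_keys: set[str]) -> list[str]:
    """Return a list of problems with a JSON object an agent produced."""
    try:
        data = json.loads(payload)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["expected a JSON object"]
    missing = sorted(required_keys - set(data))
    return [f"missing key: {key}" for key in missing]


# Only outputs with no problems move on to human review.
problems = check_json_format('{"title": "Q3 report"}', {"title", "owner"})
print(problems)  # ['missing key: owner']
```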

Side-by-side comparison works well for improving AI quality. Have your AI agent generate two versions of a deliverable. Let humans evaluate which is better and why. Feed that evaluation back into the agent's instructions. Over time, the agent's default output quality rises to match your standards.

Track quality metrics separately for human and AI work. Not to compare them, but to identify where each needs improvement. If AI-generated code has more bugs than human-written code, the AI instructions need refinement. If human-written copy takes longer to produce than AI-generated copy without significantly higher quality, the humans might benefit from using AI as a starting point.
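
Tracking the two streams separately can start as a simple tally. The sketch below assumes each reviewed item is logged as a hypothetical `(origin, had_defect)` pair; the sample records are made up for illustration and are not real data.

```python
from collections import defaultdict


def defect_rates(records):
    """Compute the share of reviewed items with a defect, per origin.

    `records` is an iterable of (origin, had_defect) pairs.
    """
    totals = defaultdict(int)
    defects = defaultdict(int)
    for origin, had_defect in records:
        totals[origin] += 1
        defects[origin] += int(had_defect)
    return {origin: defects[origin] / totals[origin] for origin in totals}


# Made-up review log for illustration.
review_log = [
    ("ai", True), ("ai", False), ("ai", False), ("ai", False),
    ("human", False), ("human", True),
]
print(defect_rates(review_log))  # {'ai': 0.25, 'human': 0.5}
```

The rates point at where to intervene (agent instructions or human workflow); they are not meant as a scoreboard between people and agents.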

Culture in a Hybrid Team

This is the part most management articles ignore, and it is the part that matters most.

Human team members have legitimate concerns about working alongside AI agents. Will AI replace their job? Is their work being devalued? Are they being compared to machines? These concerns, if unaddressed, erode morale, reduce engagement, and drive attrition.

Address them directly. Be transparent about which tasks are being automated and why. Explain how AI changes their role rather than eliminating it. The designer is not replaced by AI. The designer is elevated from producing 20 variations manually to directing an AI that produces 200 variations while the designer focuses on creative strategy and client relationships.

Celebrate human contributions explicitly. In a world where AI handles the routine, human judgment, creativity, and relationship skills become more valuable, not less. Make sure your team knows that. Recognize the decisions they make, the problems they solve, and the relationships they build.

Create space for humans to learn AI skills. The team members who embrace AI tools become more valuable, not less. Provide training, time for experimentation, and recognition for innovative AI applications. Make AI fluency a career development opportunity, not a threat.

And do not dehumanize the human side of your team to match the efficiency of the AI side. AI agents do not need breaks, social time, or emotional support. Humans do. Do not let the always-on nature of AI agents create unrealistic expectations for human availability and productivity.

The Management Operating System

Build a management rhythm that accounts for both human and AI team members.

Daily standups include both. Human team members share what they are working on. AI agent dashboards show what the agents completed overnight. Everyone sees the full picture.

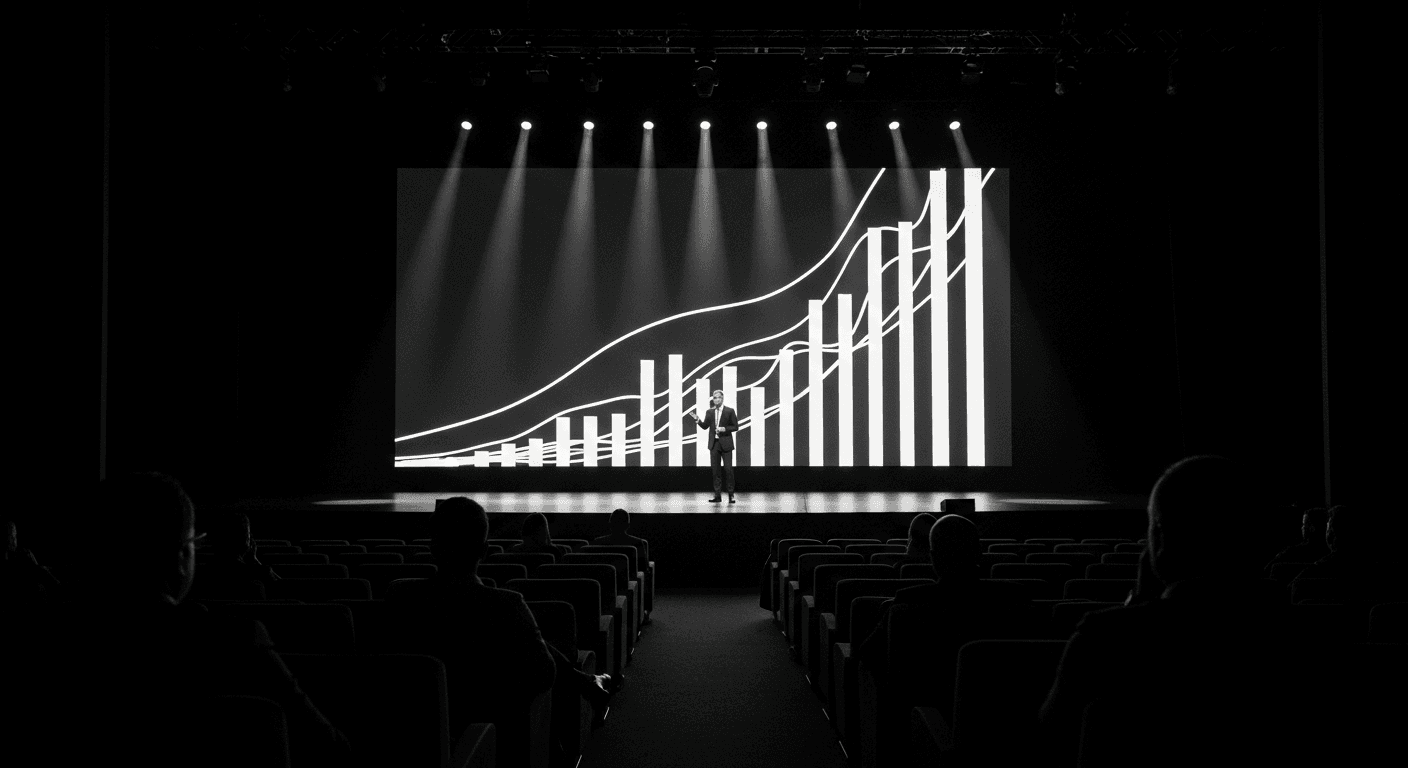

Weekly reviews evaluate both human performance and AI performance. Are the agents producing the expected quality? Are the human review processes catching issues? Are the workflows efficient?

Monthly retrospectives focus on the interface between human and AI work. Where are the handoff points causing friction? Which AI agents need better instructions? Which human team members need more AI training?

Quarterly strategic reviews ask bigger questions. Should we automate more tasks? Should we hire more humans for growing areas? Is our human-AI ratio optimal?

The companies that master hybrid team management will have a decisive advantage. They will move faster than all-human teams and produce higher quality than all-AI operations. Orchestrating humans and AI together is becoming one of the most valuable leadership capabilities of the decade.

Start building that capability now. Your competitors already are.
