Picking the wrong model is the most expensive mistake nobody talks about.

Not because the API bill is catastrophic. Because you build your entire product around a model's strengths, ship it, and then discover that a different model would have been 3x cheaper at equal quality. Or that a smaller model handles 80% of your traffic without breaking a sweat.

We have routed millions of requests across multiple model providers. Here is what we actually learned about choosing models in 2026. Not the marketing. The reality.

Claude: The Thinking Model

Claude is the model you reach for when the task requires genuine reasoning.

Long-context analysis is where Claude separates itself. Feed it a 200-page contract and ask it to identify conflicting clauses. Feed it an entire codebase and ask it to trace a bug across multiple files. Feed it a research paper and ask it to evaluate the methodology. This is where Claude operates at a level that genuinely impresses.

Instruction following is Claude's other superpower. Complex, multi-step instructions with conditional logic, specific formatting requirements, and edge case handling. Claude follows them. Other models interpret them. There is a meaningful difference when you are building agent workflows that chain multiple operations.

The trade-off is cost and sometimes speed. Claude is not the cheapest option for simple tasks. Using Claude to classify sentiment or extract email addresses is like hiring a surgeon to put on a band-aid. Technically correct. Absurdly wasteful.

Code generation deserves special mention. Claude produces code that works on the first try more consistently than anything else we have tested. For complex functions with nuanced requirements, the gap is significant. For simple CRUD operations, the gap closes.

GPT: The Generalist

GPT models are the Swiss Army knife. Good at everything. Best at nothing in particular.

Speed is where GPT often wins. For applications where latency matters more than depth of reasoning, GPT delivers. Real-time chat interfaces, autocomplete suggestions, quick classifications. The response comes fast and it is good enough.

The ecosystem around GPT is mature. More tutorials, more examples, more integrations. If you are building something conventional, GPT probably has a reference implementation somewhere. That matters more than benchmarks when you are trying to ship quickly.

Multimodal capabilities have improved dramatically: image understanding and audio processing on the input side, structured output generation on the output side. GPT handles diverse input and output types with consistent quality.

The caution: "good at everything" means you sometimes miss that a specialized model would be dramatically better for your specific use case. We have seen teams use GPT for tasks where Claude outperforms by 30% on quality metrics simply because GPT was the default choice nobody questioned.

Open Source: The Economic Play

Llama, Mistral, Qwen. The open-source ecosystem in 2026 is legitimately impressive.

Self-hosted models eliminate per-token API fees entirely. For high-volume, routine tasks, the math is overwhelming. If you process a million classification requests per day, the difference between $0.001 per request and $0 in per-request fees is $30,000 per month (1,000,000 × $0.001 × 30 days). That funds a lot of GPU infrastructure.
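The arithmetic above, plus a break-even check, fits in a few lines of Python. This is a minimal sketch assuming a 30-day month; the function names and the $15,000 monthly self-hosting budget are hypothetical, and GPU, staffing and operations costs are deliberately kept out of the per-request figure.

```python
def monthly_api_spend(requests_per_day: float, price_per_request: float, days: int = 30) -> float:
    """Per-request API fees over one month (excludes any infrastructure cost)."""
    return requests_per_day * price_per_request * days


def break_even_requests_per_day(
    monthly_self_hosted_cost: float, price_per_request: float, days: int = 30
) -> float:
    """Daily volume at which API fees equal a fixed monthly self-hosting budget."""
    return monthly_self_hosted_cost / (price_per_request * days)


# The example from the text: one million requests per day at $0.001 each.
spend = monthly_api_spend(1_000_000, 0.001)
print(f"API spend: ${spend:,.0f} per month")

# Hypothetical all-in self-hosting budget of $15,000 per month.
volume = break_even_requests_per_day(15_000, 0.001)
print(f"Self-hosting breaks even above ~{volume:,.0f} requests per day")
```

Below the break-even volume, the API fees are smaller than the assumed self-hosting budget, and that is before counting the operational expertise self-hosting requires.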

Customization is the other major advantage. Fine-tune on your specific data. Add domain knowledge. Optimize for your exact use case. You cannot fine-tune Claude or GPT with the same level of control.

But the honest trade-off: open-source models require infrastructure expertise. Model hosting, scaling, monitoring, updating. These are not trivial operational burdens. You need people who understand GPU allocation, model quantization, and inference optimization. If you do not have those people, the "free" model costs more than the paid API.

For quality on complex reasoning tasks, frontier closed models still lead. The gap is narrowing. But it exists. Pretending otherwise wastes engineering time and degrades the user experience.

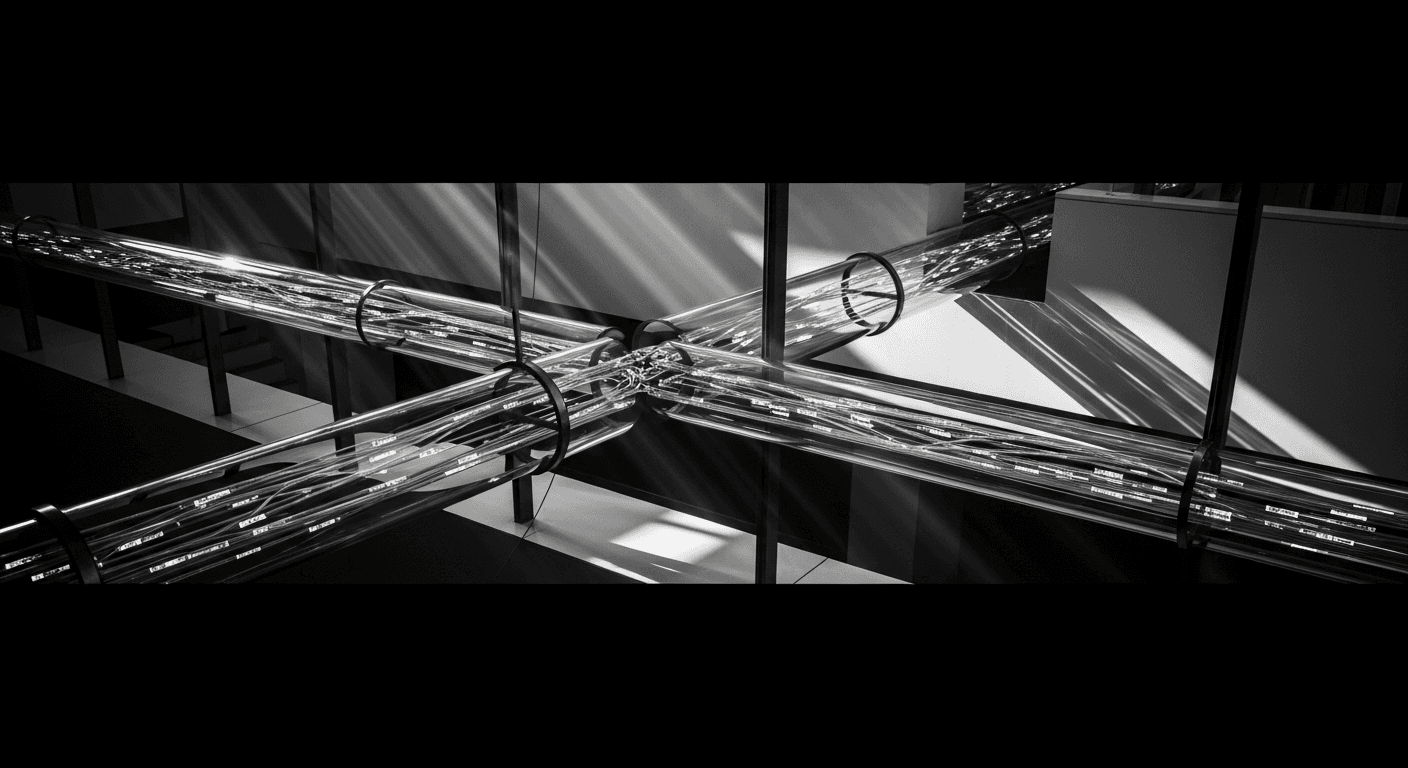

The Multi-Model Strategy

The winning approach is obvious once you see it. Stop treating model selection as a single decision.

Build a routing layer. Classify incoming requests by complexity, required quality, and latency tolerance. Route accordingly.

Simple classification, extraction, formatting? Open-source model. Fast, cheap, good enough.

General-purpose generation, summarization, standard Q&A? GPT. Fast, reliable, well-integrated.

Complex reasoning, code generation, long-context analysis, agent workflows? Claude. Higher cost, higher quality when it matters.
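A routing layer along these lines might look like the following sketch. It assumes each request arrives with a task label and a token count; the tier names, model identifiers (`open-source-small`, `gpt-general`, `claude-reasoning`), task labels and both thresholds are hypothetical placeholders. The rule that a tight latency budget downgrades complex work to the general tier is also an assumed policy, included only to show where latency tolerance plugs in.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    SIMPLE = "simple"    # classification, extraction, formatting
    GENERAL = "general"  # generation, summarization, standard Q&A
    COMPLEX = "complex"  # reasoning, code generation, long context, agents


# Hypothetical model identifiers: substitute the deployments you actually use.
MODEL_FOR_TIER = {
    Tier.SIMPLE: "open-source-small",
    Tier.GENERAL: "gpt-general",
    Tier.COMPLEX: "claude-reasoning",
}

SIMPLE_TASKS = {"classification", "extraction", "formatting"}
COMPLEX_TASKS = {"reasoning", "code_generation", "agent"}
LONG_CONTEXT_TOKENS = 50_000  # assumed cutoff for "long-context" work
FAST_LATENCY_MS = 1_000       # assumed cutoff for latency-critical requests


@dataclass
class Request:
    task: str
    input_tokens: int
    high_quality: bool = False
    max_latency_ms: int = 10_000


def classify(req: Request) -> Tier:
    """Assign a tier from task type, required quality and latency tolerance."""
    if req.task in COMPLEX_TASKS or req.input_tokens > LONG_CONTEXT_TOKENS:
        # Assumed policy: a tight latency budget trades depth for speed.
        return Tier.GENERAL if req.max_latency_ms < FAST_LATENCY_MS else Tier.COMPLEX
    if req.task in SIMPLE_TASKS and not req.high_quality:
        return Tier.SIMPLE
    return Tier.GENERAL


def route(req: Request) -> str:
    """Map a request to the model identifier for its tier."""
    return MODEL_FOR_TIER[classify(req)]


print(route(Request("extraction", 300)))         # open-source-small
print(route(Request("summarization", 4_000)))    # gpt-general
print(route(Request("code_generation", 2_000)))  # claude-reasoning
```

In production the `classify` step is often a small model rather than hand-written rules; the routing table stays the same either way.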

This tiered approach typically reduces costs by 40-60% compared to routing everything through a single frontier model. Quality stays equal or improves because each request hits the model best suited for it.
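How much a tiered setup saves depends entirely on your traffic mix and prices. A sketch of the calculation follows; every price and traffic share in it is a made-up placeholder, not a measured figure.

```python
def blended_cost_per_request(mix: dict[str, float], prices: dict[str, float]) -> float:
    """Average cost per request when traffic is split across tiers."""
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1")
    return sum(share * prices[tier] for tier, share in mix.items())


def savings_vs_single_model(
    mix: dict[str, float], prices: dict[str, float], single_tier: str
) -> float:
    """Fractional saving compared with sending every request to one tier."""
    return 1 - blended_cost_per_request(mix, prices) / prices[single_tier]


# Hypothetical $ per request and traffic shares; replace with your own numbers.
prices = {"simple": 0.001, "general": 0.005, "complex": 0.010}
mix = {"simple": 0.4, "general": 0.3, "complex": 0.3}

saving = savings_vs_single_model(mix, prices, single_tier="complex")
print(f"Saving vs. all-frontier routing: {saving:.0%}")
```

Escalations from a fallback mechanism add some cost back, so measure the escalation rate before trusting an estimate like this.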

The implementation is not complicated. A lightweight classifier determines task complexity. A routing function maps complexity to model. A fallback mechanism escalates to a more capable model if the initial response fails quality checks.
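The escalation step could be sketched like this, assuming you already have a function that calls a given model and a quality check for its output. `call_model`, `passes_quality` and the model ladder are hypothetical stand-ins; the stub in the usage example replaces real API calls.

```python
from typing import Callable

# Hypothetical escalation ladder, cheapest and fastest first.
LADDER = ["open-source-small", "gpt-general", "claude-reasoning"]


def generate_with_fallback(
    prompt: str,
    start_model: str,
    call_model: Callable[[str, str], str],
    passes_quality: Callable[[str], bool],
) -> tuple[str, str]:
    """Try start_model, then each more capable model, until a response passes.

    Returns (model_used, response). If nothing passes, the most capable
    model's answer is returned so the caller can decide what to do.
    """
    start = LADDER.index(start_model)
    model, response = start_model, ""
    for model in LADDER[start:]:
        response = call_model(model, prompt)
        if passes_quality(response):
            break
    return model, response


# Usage with a stub: the small model returns an empty answer and gets escalated.
def fake_call(model: str, prompt: str) -> str:
    return "" if model == "open-source-small" else f"{model} answered: {prompt}"


used, answer = generate_with_fallback(
    "Extract the invoice total", "open-source-small", fake_call, lambda r: bool(r.strip())
)
print(used)  # gpt-general
```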

Practical Selection Framework

When evaluating models for a new use case, run this process.

Step one: define your quality bar with specific, measurable criteria. Not "good responses." Things like "correctly identifies all entities in the document with 95%+ accuracy."
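One way to turn that example criterion into an automated check, assuming "accuracy" means the share of documents whose extracted entity set exactly matches a hand-labeled reference (other readings, such as per-entity recall, are equally valid). The function names and sample data are illustrative.

```python
QUALITY_BAR = 0.95  # the "95%+ accuracy" criterion


def entity_accuracy(expected: list[set[str]], predicted: list[set[str]]) -> float:
    """Share of documents where the predicted entities exactly match the labels."""
    if not expected or len(expected) != len(predicted):
        raise ValueError("expected and predicted must be non-empty and the same length")
    correct = sum(1 for gold, pred in zip(expected, predicted) if gold == pred)
    return correct / len(expected)


def meets_quality_bar(expected: list[set[str]], predicted: list[set[str]]) -> bool:
    return entity_accuracy(expected, predicted) >= QUALITY_BAR


# Illustrative data: the second document is missing "Paris".
gold = [{"Acme Corp"}, {"Jane Doe", "Paris"}]
pred = [{"Acme Corp"}, {"Jane Doe"}]
print(entity_accuracy(gold, pred), meets_quality_bar(gold, pred))
```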

Step two: test all candidate models on 100 representative examples. Score each against your criteria. This takes a day. It saves weeks of regret.
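A minimal evaluation harness for this step might look like the following sketch. It assumes each candidate model is wrapped in a plain function from input text to output text and that you supply a scoring function encoding your quality bar; the stub candidates exist only so the example runs without API keys.

```python
from statistics import mean
from typing import Callable, Sequence


def evaluate(
    candidates: dict[str, Callable[[str], str]],
    examples: Sequence[tuple[str, str]],
    score: Callable[[str, str], float],
) -> dict[str, float]:
    """Mean score of each candidate over (input, expected_output) examples."""
    return {
        name: mean(score(model(text), expected) for text, expected in examples)
        for name, model in candidates.items()
    }


def exact_match(output: str, expected: str) -> float:
    return 1.0 if output.strip() == expected.strip() else 0.0


# Stub "models" standing in for real API clients.
candidates = {"shouting-model": str.upper, "echo-model": lambda s: s}
examples = [("positive", "POSITIVE"), ("negative", "NEGATIVE")]  # use ~100 real ones

results = evaluate(candidates, examples, exact_match)
print(results)
```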

Step three: calculate total cost of ownership. API costs per request multiplied by projected volume. Plus engineering time for integration. Plus infrastructure costs for self-hosted models. Plus ongoing maintenance.
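Summing those four components over a fixed horizon is straightforward. In this sketch the 12-month horizon, the field names and all sample figures are hypothetical; the point is to compare an API model and a self-hosted model on the same footing.

```python
from dataclasses import dataclass


@dataclass
class CostProfile:
    api_cost_per_request: float       # $ per request; 0 for a self-hosted model
    monthly_requests: int             # projected volume
    integration_hours: float          # one-off engineering time
    monthly_infrastructure: float     # GPUs and hosting, mainly for self-hosted
    monthly_maintenance_hours: float  # upgrades, monitoring, on-call


def total_cost_of_ownership(c: CostProfile, hourly_rate: float, months: int = 12) -> float:
    api = c.api_cost_per_request * c.monthly_requests * months
    integration = c.integration_hours * hourly_rate
    infrastructure = c.monthly_infrastructure * months
    maintenance = c.monthly_maintenance_hours * hourly_rate * months
    return api + integration + infrastructure + maintenance


# Hypothetical figures for illustration only.
hosted_api = CostProfile(0.001, 30_000_000, 40, 0, 2)
self_hosted = CostProfile(0.0, 30_000_000, 160, 8_000, 20)

for name, profile in [("hosted API", hosted_api), ("self-hosted", self_hosted)]:
    print(f"{name}: ${total_cost_of_ownership(profile, hourly_rate=100):,.0f}")
```

With these made-up inputs the self-hosted option wins; at lower volume or with higher staffing costs, the ordering flips.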

Step four: pick the model that meets your quality bar at the lowest total cost. Not the model with the highest benchmark scores. Not the trendiest model. The one that solves your specific problem economically.
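The selection rule itself is a filter followed by a minimum. A sketch, assuming you already have a quality score from step two and a total-cost figure from step three for each candidate (names and numbers are placeholders):

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    name: str
    quality: float     # score from your own evaluation set
    total_cost: float  # total cost of ownership over the same horizon


def pick_model(candidates: list[Candidate], quality_bar: float) -> Optional[Candidate]:
    """Cheapest candidate that clears the bar, or None if none do."""
    eligible = [c for c in candidates if c.quality >= quality_bar]
    return min(eligible, key=lambda c: c.total_cost, default=None)


options = [
    Candidate("frontier-model", quality=0.99, total_cost=366_400),
    Candidate("self-hosted-model", quality=0.96, total_cost=136_000),
    Candidate("small-api-model", quality=0.91, total_cost=40_000),
]
choice = pick_model(options, quality_bar=0.95)
print(choice.name if choice else "nobody meets the bar")  # self-hosted-model
```

The highest-quality candidate loses here: it clears the bar, but so does something cheaper.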

Step five: build with abstraction. Use a model-agnostic interface so you can switch providers without rewriting your application. The model landscape changes quarterly. Your architecture should accommodate that.
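A model-agnostic interface can be as small as a structural protocol that every provider adapter satisfies. In this sketch `ChatModel`, the two stub adapters and `summarize_ticket` are hypothetical; a real adapter would wrap a vendor SDK inside its `complete` method, and application code would not change when you swap one adapter for another.

```python
from typing import Protocol


class ChatModel(Protocol):
    """The only surface application code is allowed to depend on."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stub adapter; a real one would call a provider's SDK here."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ShoutingModel:
    """Second stub adapter, standing in for a different provider."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


PROVIDERS: dict[str, ChatModel] = {"echo": EchoModel(), "shout": ShoutingModel()}


def summarize_ticket(model: ChatModel, ticket: str) -> str:
    # Application logic knows nothing about which provider is behind `model`.
    return model.complete(f"Summarize: {ticket}")


# Switching providers is a configuration change, not a rewrite.
for key in ("echo", "shout"):
    print(summarize_ticket(PROVIDERS[key], "login page times out"))
```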

The model you pick today might not be the model you use in six months. Design for that reality.
