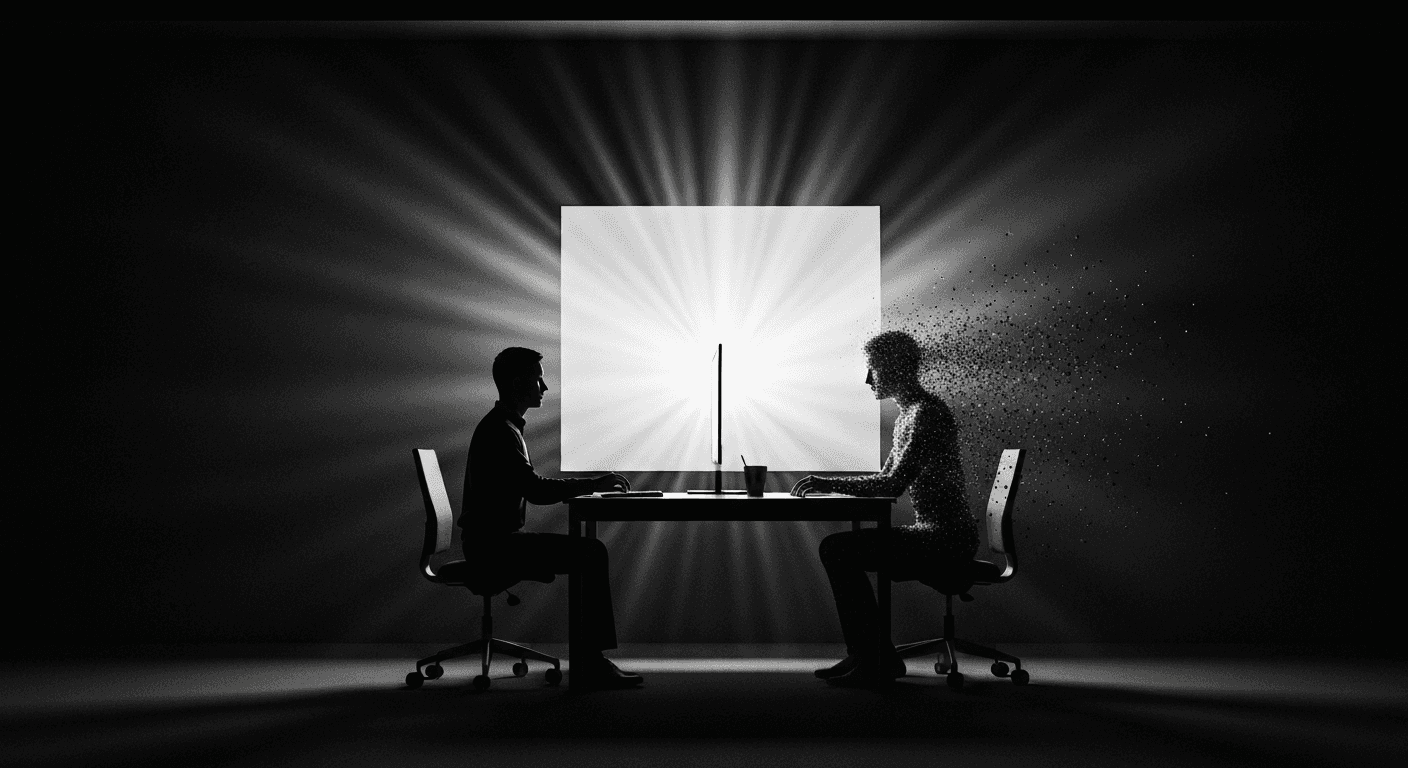

Autonomous coding agents are the most significant shift in software development since we stopped writing assembly by hand. I realize that sounds hyperbolic. It is not.

These agents do not autocomplete your code. They plan architectures, implement features, write tests, fix bugs, and deploy applications. You give them a goal. They figure out the path.

I have been building with autonomous agents for the past year. Here is everything I know.

How They Actually Work

Under the hood, an autonomous coding agent is a loop with five components.

A planning module that breaks your high-level request into executable subtasks. "Build a user authentication system" becomes: create database schema, implement auth endpoints, add middleware, write tests, configure session management. The agent decomposes the problem before writing a single line of code.

A code generation engine that implements each subtask. This is the part most people think about when they hear "AI coding." But generation is maybe 30% of the agent's job.

A testing and validation layer that checks every piece of generated code against the project's quality standards. Does it compile? Do the types check? Do the tests pass? Does it follow the conventions defined in CLAUDE.md, the project's instruction file for the agent?

An error recovery system that handles failures. When a test fails, the agent reads the error, diagnoses the problem, fixes the code, and reruns the tests. This loop continues until everything passes or the agent determines it needs human input.

A memory module that maintains context across sessions. The agent remembers what it built yesterday, what decisions were made, and what patterns the project follows. Without memory, every session starts from scratch.

The loop is what separates true autonomous agents from fancy autocomplete. The underlying model still predicts tokens, but the agent does not stop at a single prediction. It solves the problem through iterative refinement, checking each result and feeding failures back in.
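To make the five components concrete, here is a minimal sketch of the loop in Python. It is an illustration, not the internals of any particular agent. Every name in it (`run_agent`, `Memory`, `CheckResult`, the `toy_*` stand-ins, and the `max_attempts` limit of 3) is hypothetical, and the planner, generator, and validator are passed in as plain functions so the control flow is visible.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class CheckResult:
    passed: bool
    error: str = ""


@dataclass
class Memory:
    """Stand-in for the memory module: notes that outlive a single subtask."""
    notes: List[str] = field(default_factory=list)


def run_agent(
    goal: str,
    plan: Callable[[str], List[str]],
    generate: Callable[[str, Optional[str]], str],
    validate: Callable[[str], CheckResult],
    memory: Memory,
    max_attempts: int = 3,  # hypothetical retry budget before asking a human
) -> dict:
    completed, needs_human = [], []
    for subtask in plan(goal):                    # planning module
        error = None
        for attempt in range(1, max_attempts + 1):
            code = generate(subtask, error)       # code generation, sees the last error
            result = validate(code)               # testing and validation
            if result.passed:
                completed.append(subtask)
                memory.notes.append(f"{subtask}: passed on attempt {attempt}")
                break
            error = result.error                  # error recovery: feed the failure back
        else:
            needs_human.append(subtask)           # retry budget exhausted, escalate
            memory.notes.append(f"{subtask}: escalated after {max_attempts} attempts")
    return {"completed": completed, "needs_human": needs_human}


# Toy stand-ins so the sketch runs end to end without a model.
def toy_plan(goal: str) -> List[str]:
    return ["create database schema", "implement auth endpoints", "add middleware",
            "write tests", "configure session management"]


def toy_generate(subtask: str, error: Optional[str]) -> str:
    return f"# {subtask}" + (" (fixed)" if error else "")


def toy_validate(code: str) -> CheckResult:
    # Pretend the endpoints fail once, then pass after the generator sees the error.
    if "endpoints" in code and "(fixed)" not in code:
        return CheckResult(False, "test_login failed")
    return CheckResult(True)


if __name__ == "__main__":
    memory = Memory()
    print(run_agent("Build a user authentication system",
                    toy_plan, toy_generate, toy_validate, memory))
    print(memory.notes)
```

The `for ... else` is the part worth noticing. A subtask either passes within its retry budget or lands in `needs_human`, so the loop cannot spin forever on a problem it cannot fix.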

The Reliability Problem (And How to Solve It)

Here is the uncomfortable truth: autonomous agents are not 100% reliable. Neither are human developers, but we have spent decades building systems around human fallibility. We need similar guardrails for AI.

Type checking is the first line of defense. In a TypeScript project, strict mode catches a huge category of agent mistakes at compile time. If the agent generates code that does not type-check, the change is rejected before it lands in the codebase.

Automated tests are the second line. Every feature the agent builds should have corresponding tests. The agent runs these tests itself and does not report success until they pass. This self-verification is the single most important reliability mechanism.

Build verification is the third line. The entire project must compile and build successfully after every change. If the agent introduces a build-breaking change, it detects and fixes it immediately.

Human review checkpoints exist for critical decisions. Architecture changes, security-sensitive code, and data migration scripts all go through human review. The agent flags these automatically.

This layered approach means the agent can operate autonomously on routine tasks while escalating the important stuff. The goal is not zero human involvement. It is optimal human involvement.
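The layering can be sketched as a short pipeline: run the gates in order, stop at the first failure, and flag sensitive changes for a human even when every gate passes. This is a sketch under assumptions, not a real tool. `verify_change`, `Verdict`, and the `SENSITIVE_PATTERNS` list are hypothetical names, and the gates here are plain callables. In a TypeScript project they might wrap commands such as `tsc --noEmit` plus whatever test and build scripts the project defines.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Callable, Sequence, Tuple

# Hypothetical patterns for paths that always need a human reviewer.
# Deliberately crude: "*auth*" would also match "author.ts".
SENSITIVE_PATTERNS = ("*auth*", "*migrations/*", "*payment*")

Gate = Tuple[str, Callable[[], bool]]  # (gate name, check that returns True on success)


@dataclass
class Verdict:
    accepted: bool
    failed_gate: str = ""
    needs_human_review: bool = False


def verify_change(changed_files: Sequence[str], gates: Sequence[Gate]) -> Verdict:
    # Run gates in order and stop at the first failure, so later
    # (usually slower) gates never run against a change that is already rejected.
    for name, check in gates:
        if not check():
            return Verdict(accepted=False, failed_gate=name)
    # All gates passed. Still escalate if the change touches a sensitive path.
    sensitive = any(
        fnmatchcase(path, pattern)
        for path in changed_files
        for pattern in SENSITIVE_PATTERNS
    )
    return Verdict(accepted=True, needs_human_review=sensitive)


if __name__ == "__main__":
    gates = [("typecheck", lambda: True), ("tests", lambda: True), ("build", lambda: True)]
    print(verify_change(["src/ui/button.tsx"], gates))
    print(verify_change(["src/auth/login.ts"], gates))
```

Passing the gates and needing review are kept as separate fields on purpose. A change to authentication code can be green on every automated check and still be something a human should read.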

The Economics Are Insane

One developer paired with autonomous coding agents can produce roughly the output of a five-person team. I have measured this across multiple projects, and the ratio holds.

The agent handles: boilerplate code, testing, documentation, routine bug fixes, code reviews, dependency updates, and deployment configuration. That is easily 70-80% of what a traditional development team spends time on.

The developer handles: architecture, product decisions, complex problem-solving, user experience design, and final quality review. These are the highest-leverage activities, and now a single person can focus on them full-time instead of splitting attention with execution work.

The cost difference is dramatic. A five-person team costs $500K to $1M per year in salary alone. One developer plus agent infrastructure costs a fraction of that and produces comparable output. For startups and solo founders, this changes the math on what is possible to build.
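If you want to run the numbers for your own situation, the arithmetic is short. The sketch below assumes salaries are split evenly across the team. The `agent_cost` of $20K per year is a placeholder I made up for illustration, not a measured figure, so substitute your own. `cost_fraction` is a hypothetical helper name.

```python
def cost_fraction(team_cost: float, team_size: int, agent_cost: float) -> float:
    """Cost of one developer plus agents, as a fraction of the full team's cost.

    Assumes every team member costs the same, which is a simplification.
    """
    per_developer = team_cost / team_size
    return (per_developer + agent_cost) / team_cost


if __name__ == "__main__":
    PLACEHOLDER_AGENT_COST = 20_000  # hypothetical annual figure, replace with your own
    for team_cost in (500_000, 1_000_000):  # the salary range quoted above
        fraction = cost_fraction(team_cost, 5, PLACEHOLDER_AGENT_COST)
        print(f"${team_cost:,} team -> {fraction:.0%} of the team's cost")
```

Under those assumptions the result is 24% at the low end of the range and 22% at the high end. Most of the saving comes from headcount, and the agent bill barely moves it.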

Adoption Is Accelerating

The tools crossed a maturity threshold in late 2025. Before that, autonomous agents were impressive demos that fell apart on real projects. Now they handle production workloads.

Modern agents understand project context. They read your CLAUDE.md, navigate your codebase, and follow your conventions. They produce code that passes linting, type checking, and test suites on the first attempt in most cases.

The remaining edge cases are handled by the error recovery loop. When the agent produces something that does not pass validation, it reads the error, fixes the issue, and tries again. This self-correction capability is what makes the difference between a toy and a tool.

When Not to Use Them

Autonomous agents are not the right choice for everything.

Novel algorithm design. If you are implementing something truly new, where the right approach is uncertain, you need human creativity and intuition. Agents excel at implementing known patterns, not inventing new ones.

Highly ambiguous requirements. "Make the app feel better" is not a task an agent can execute. Clear, specific requirements produce dramatically better results than vague ones.

Critical security code. Cryptographic implementations, authentication protocols, and payment processing logic should always have human review. Agents can implement these features, but a human must verify the security properties.

For everything else, autonomous agents, run behind the guardrails described above, are the fastest and most dependable way I know to build software today.

Getting Started

Do not start with a greenfield project. Take an existing codebase with good test coverage and try using an autonomous agent for a medium-complexity feature. Something that would normally take you a day or two.

Write a thorough CLAUDE.md file that describes the project's architecture, conventions, and quality standards. Give the agent the feature specification. Let it work.

Review the output carefully. Correct any patterns you do not like by updating CLAUDE.md. Run the agent again on a similar task and notice how the output quality improves.

Within a few iterations, you will have calibrated the agent to your project's standards. From there, the productivity gains compound fast.
