Copilot was training wheels. Useful training wheels. But training wheels nonetheless.

I remember the first time GitHub Copilot suggested a complete function body and I thought "okay, this changes things." That feeling was correct, but not for the reason I imagined. Copilot did not change things by completing my code. It changed things by proving that AI could understand programming context well enough to be useful.

The next generation went much further.

The Context Gap

Here is the fundamental difference between code completion and AI pair programming: scope.

Code completion operates at the line or function level. It sees the current file, maybe some imports, and predicts what you probably want to type next. It is fast, convenient, and shallow.

AI pair programming operates at the project level. It understands how your components interact. It knows where technical debt is accumulating. It can tell you that the feature you are about to build will conflict with something in a module you have not opened in weeks.

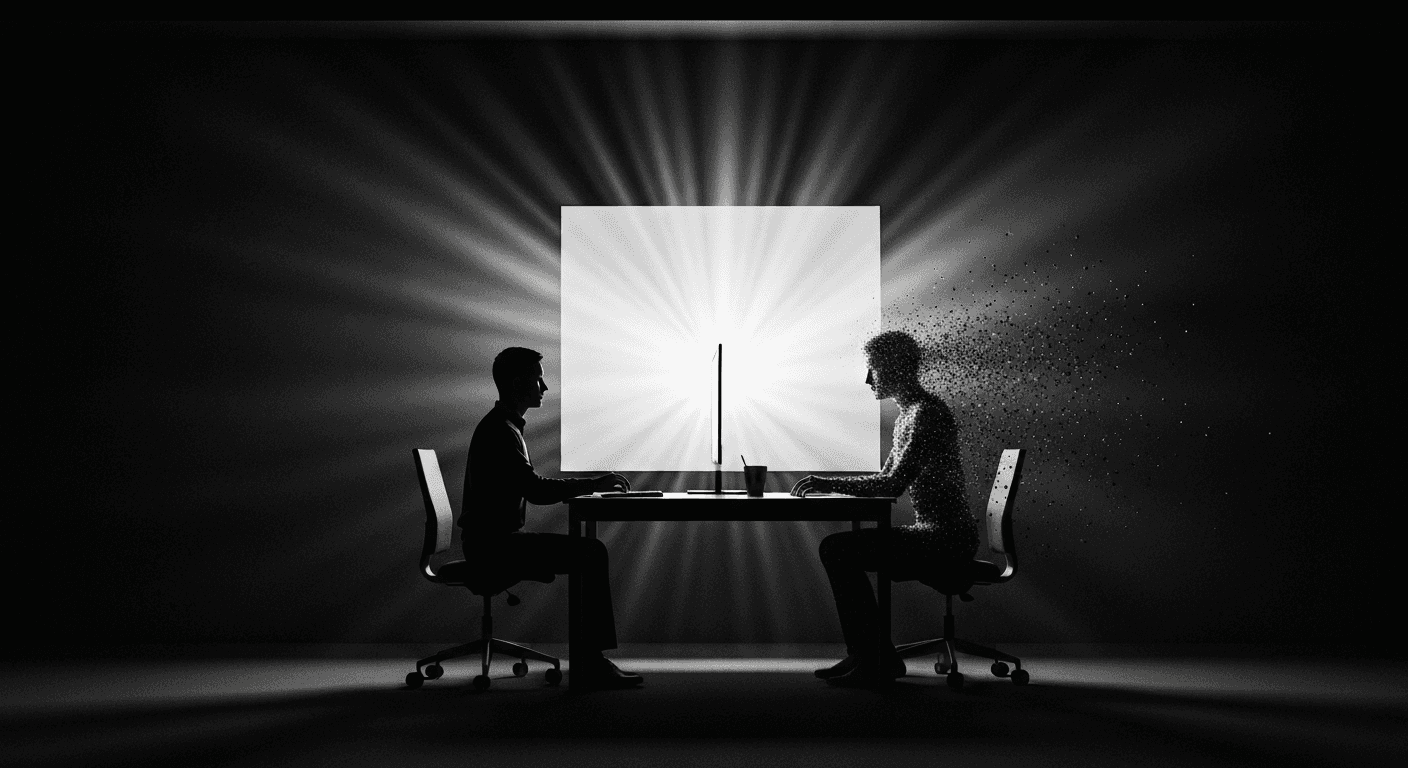

This is the difference between a tool that finishes your sentences and a colleague who understands your codebase.

What a Session Actually Looks Like

Forget the autocomplete paradigm. AI pair programming is a conversation.

I describe what I want to build at a high level. "We need a notification system that supports email, in-app, and push notifications with user preference management."

The AI proposes an implementation plan. Database schema for notification preferences. Service layer for notification dispatching. API endpoints for preference management. UI components for the settings page. Integration with existing email and push providers.

We refine the plan together. "The email integration should use Resend, not SendGrid. And notification preferences should be per-channel, not global." The AI adjusts the plan.
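To make "per-channel, not global" concrete, here is a minimal sketch of how such preferences might be modeled. Every name in it (`Channel`, `NotificationPreferences`, `enabledChannels`, `dispatch`) is hypothetical and chosen for illustration; it is not the schema from any real session, and the senders are placeholders for whatever email or push provider you use.

```typescript
// Hypothetical types for illustration; none of these names come from a real library.
type Channel = "email" | "inApp" | "push";

const ALL_CHANNELS: Channel[] = ["email", "inApp", "push"];

// Per-channel preferences: each channel is toggled independently,
// instead of one global on/off switch.
type NotificationPreferences = Record<Channel, boolean>;

interface NotificationMessage {
  userId: string;
  title: string;
  body: string;
}

// A sender delivers one message over one channel (assumed to be async,
// since real providers are called over the network).
type Sender = (message: NotificationMessage) => Promise<void>;

// Pure helper: which channels has this user opted into?
function enabledChannels(preferences: NotificationPreferences): Channel[] {
  return ALL_CHANNELS.filter((channel) => preferences[channel]);
}

// Sends the message on every enabled channel and reports which ones were used.
async function dispatch(
  message: NotificationMessage,
  preferences: NotificationPreferences,
  senders: Record<Channel, Sender>,
): Promise<Channel[]> {
  const channels = enabledChannels(preferences);
  await Promise.all(channels.map((channel) => senders[channel](message)));
  return channels;
}
```

Keeping the channel selection in a pure function separate from the sending makes the routing easy to test without touching any provider.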

Then the AI executes. It builds the schema, implements the services, creates the API routes, generates the UI components, writes the tests. I review as it goes, catching architectural issues early and confirming it is on track.

In my experience, this collaborative loop is faster than either pure manual development or fully autonomous generation. The human provides judgment and direction. The AI provides speed and thoroughness. Together, they are more effective than either alone.

The Senior Developer Multiplier

The productivity gains scale with developer experience. This surprised me initially but makes perfect sense in hindsight.

A junior developer benefits from AI pair programming, but they spend significant time understanding the AI's suggestions, evaluating whether the approach is sound, and learning from the patterns the AI uses. Valuable, but the speed multiplier is modest.

A senior developer who knows exactly what they want can direct the AI like a conductor leading an orchestra. They spot architectural issues in the AI's proposals immediately. They know which design patterns fit the situation. They can evaluate code quality at a glance. The AI becomes an extension of their expertise rather than a replacement for it.

I have seen senior developers ship in hours what previously took days. Not because the AI is smarter than them, but because it executes their vision at machine speed while they stay focused on the design-level decisions that matter most.

Conversations Beat Commands

The most effective AI pair programming sessions feel like working with a thoughtful colleague. You discuss trade-offs. You ask "why did you choose this approach?" You suggest alternatives and the AI explains the implications.

Bad AI pair programming looks like issuing commands. "Write function X. Now write function Y. Now fix the bug." This misses the entire point. You are not dictating. You are collaborating.

When I approach sessions as conversations, the output quality is dramatically higher. The AI surfaces considerations I had not thought about. It proposes optimizations I would not have implemented. It catches edge cases I would have missed.

The Skill Shift

The skill that matters most in AI pair programming is not typing speed or framework knowledge. It is the ability to communicate intent clearly and evaluate solutions quickly.

Developers who can articulate what they want at the right level of abstraction, neither too vague nor too prescriptive, get the best results. "Build a caching layer" is too vague. "Implement a Redis-backed LRU cache with TTL support for API responses, with cache invalidation on data mutations" is the right level.
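For a sense of what that second prompt is asking for, here is a minimal sketch of the core behavior: least-recently-used eviction, a time-to-live, and explicit invalidation. It is deliberately an in-memory stand-in built on a `Map`, not a Redis-backed implementation, and the class name `LruTtlCache` and its parameters are my own assumptions for illustration.

```typescript
// In-memory LRU cache with TTL. Illustrative only: a Redis-backed version
// would delegate storage and expiry to Redis instead of a local Map.
interface CacheEntry<V> {
  value: V;
  expiresAt: number; // epoch milliseconds
}

class LruTtlCache<V> {
  // Map preserves insertion order, so the first key is the least recently used.
  private entries = new Map<string, CacheEntry<V>>();
  private maxEntries: number;
  private ttlMs: number;
  private now: () => number;

  // `now` is injectable so expiry can be tested without waiting.
  constructor(maxEntries: number, ttlMs: number, now: () => number = () => Date.now()) {
    this.maxEntries = maxEntries;
    this.ttlMs = ttlMs;
    this.now = now;
  }

  get(key: string): V | undefined {
    const entry = this.entries.get(key);
    if (entry === undefined) return undefined;
    if (entry.expiresAt <= this.now()) {
      this.entries.delete(key); // expired entries are dropped on read
      return undefined;
    }
    // Re-insert to mark this key as most recently used.
    this.entries.delete(key);
    this.entries.set(key, entry);
    return entry.value;
  }

  set(key: string, value: V): void {
    this.entries.delete(key);
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    if (this.entries.size > this.maxEntries) {
      const oldest = this.entries.keys().next().value;
      if (oldest !== undefined) this.entries.delete(oldest);
    }
  }

  // Call after a data mutation so a stale response is not served.
  invalidate(key: string): void {
    this.entries.delete(key);
  }
}
```

The point of the example is less the cache itself than the prompt: each clause in it (LRU, TTL, invalidation on mutation) maps to a specific piece of behavior you can review.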

The developers who will thrive are the ones who invest in design thinking, system architecture, and communication skills. The mechanical coding skills that used to differentiate developers matter less as more of that work shifts to the AI.

This is not a loss. It is a promotion.

The Setup That Works

If you want to try AI pair programming today, here is my actual setup:

I use Claude Code with a thorough CLAUDE.md file that describes my project architecture, coding conventions, and quality standards. Around it, I keep a clean, well-organized codebase with files under 500 lines, TypeScript strict mode, comprehensive type definitions, and a test suite that runs in under a minute.

Start with a medium-complexity feature. Something you could build yourself in a day. Describe it to the AI at a medium level of abstraction. Review everything it produces. Correct patterns early.

Within three sessions, you will feel the shift. You stop thinking about implementation and start thinking about design. And that is where the real value lives.
