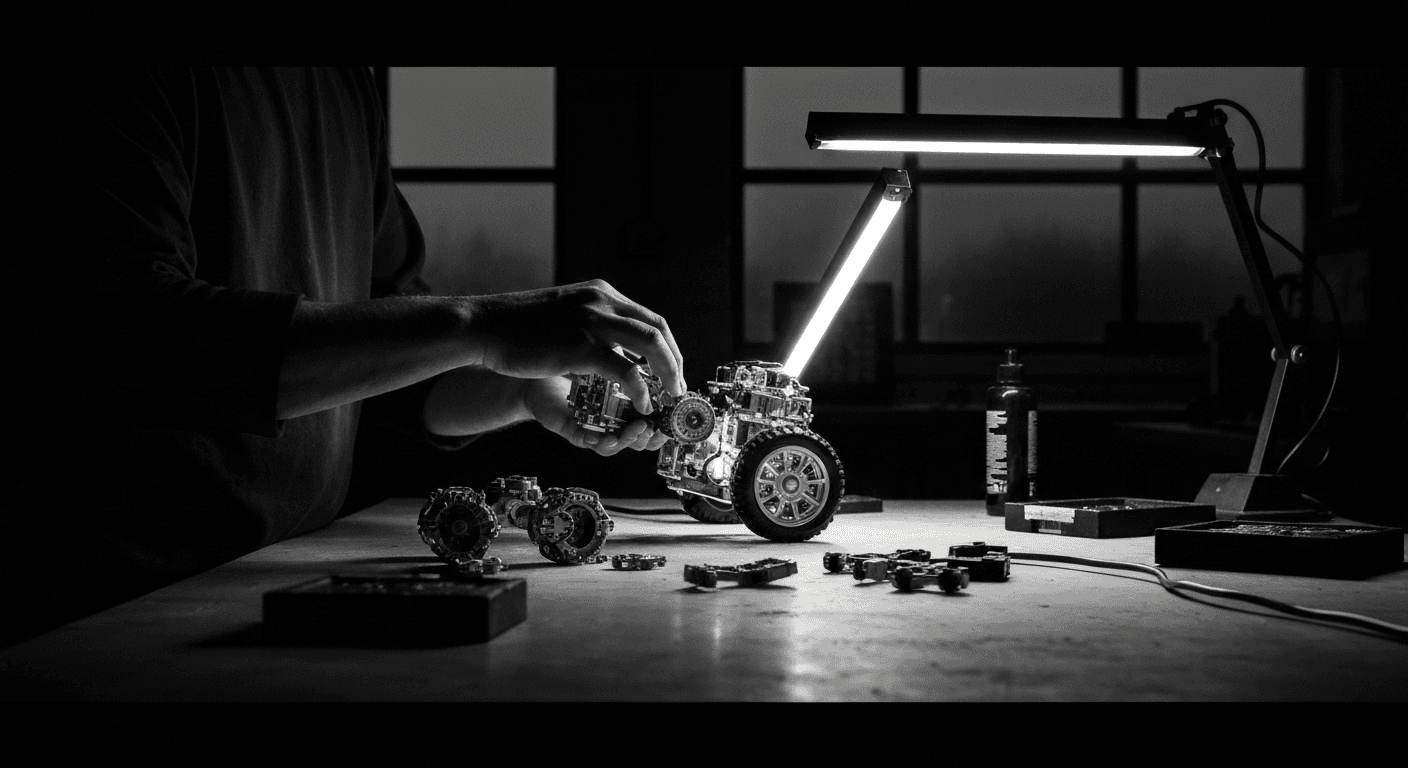

I spent three months building an AI agent that was genuinely useful. Smart prompts. Good tool integrations. Solid error handling. Users loved it for about five minutes.

Then they asked it something they had already told it. And it stared back blankly. Every. Single. Time.

That agent was a goldfish with a PhD. Brilliant in the moment, completely incapable of building on anything. And that is the exact problem memory solves.

Stateless Is a Dead End

Most agents people build today are stateless. Each conversation starts from absolute zero. The user explains their context, their preferences, their situation. Again. The agent processes it, delivers something useful, and then forgets everything the moment the session ends.

This works for toy demos. It falls apart for anything real.

Think about the best human assistant you have ever worked with. What made them great was not raw intelligence. It was that they remembered. They knew your preferences. They recalled previous decisions and why you made them. They built a mental model of you over time.

That is what memory gives an AI agent. Not just recall, but compounding usefulness.

Short-Term Memory: The Conversation Buffer

Short-term memory is the simplest form. It is the conversation context, the messages exchanged in the current session. Every agent has this by default because it is just the context window.

But managing it well is harder than it sounds. Context windows have limits. When conversations get long, you need a strategy. Naive truncation (dropping the oldest messages) throws away potentially critical information. A user might have stated something important at the start that is still relevant 50 messages later.

Better approaches include rolling summarization, where older messages get compressed into summaries that preserve key facts; priority tagging, where certain messages are marked "always include" regardless of window pressure; and selective retrieval, where only the messages relevant to the current turn get pulled into context.
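Here is a minimal Python sketch of the first two ideas, rolling summarization and priority tagging. It makes two assumptions: word count is a rough stand-in for a real tokenizer, and the `summarize` hook is hypothetical. In practice that hook would be an LLM call that folds old messages into the running summary.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Message:
    role: str
    content: str
    pinned: bool = False  # priority tag: never compressed away


def count_tokens(text: str) -> int:
    # Rough stand-in; a real system would use the model's tokenizer.
    return len(text.split())


class ConversationBuffer:
    """Short-term memory with priority tagging and rolling summarization (sketch)."""

    def __init__(self, max_tokens: int, summarize: Callable[[str, list[Message]], str]):
        self.max_tokens = max_tokens
        self.summarize = summarize  # hypothetical hook: (old_summary, messages) -> new_summary
        self.summary = ""
        self.messages: list[Message] = []

    def _total(self) -> int:
        # Approximate budget: running summary plus all kept messages.
        return count_tokens(self.summary) + sum(count_tokens(m.content) for m in self.messages)

    def add(self, message: Message) -> None:
        self.messages.append(message)
        while self._total() > self.max_tokens:
            # Oldest unpinned message, never the one just added.
            candidates = [m for m in self.messages[:-1] if not m.pinned]
            if not candidates:
                break  # only pinned or newest messages left; accept the overflow
            oldest = candidates[0]
            self.messages = [m for m in self.messages if m is not oldest]
            self.summary = self.summarize(self.summary, [oldest])

    def context(self) -> list[Message]:
        # What actually gets sent to the model on this turn.
        prefix = []
        if self.summary:
            prefix.append(Message("system", f"Summary of earlier conversation: {self.summary}"))
        return prefix + self.messages
```

Pinned messages survive any amount of window pressure, while everything else degrades gracefully into the summary instead of vanishing. Selective retrieval would go one step further and replace the fixed `messages` list with a per-turn search over the history.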

The goal is not to remember everything. It is to remember the right things at the right time.

Long-Term Memory: Where Things Get Interesting

Long-term memory is where agents go from useful to indispensable. This means persisting information across sessions. When a user comes back days or weeks later, the agent already knows their context.

The standard approach uses a vector database such as Chroma, Pinecone, Weaviate, or Qdrant. You take the information worth remembering, convert it into vector embeddings, and store it. When the agent needs to recall something, it embeds the current query and searches for semantically similar memories.
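The store-and-search cycle can be sketched in plain Python, but this is an assumption-heavy toy. `embed` is a bag-of-words counter standing in for a real embedding model, so it matches shared words rather than meaning. `InMemoryVectorStore` is a hypothetical class playing the role of a real vector database.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy stand-in for a real embedding model: a bag-of-words count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical minimal store: keeps (text, vector) pairs and ranks by cosine similarity."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Embed once at write time, as a vector database would.
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        # Embed the query and return the k most similar stored memories.
        q = embed(query)
        scored = [(text, cosine(q, vec)) for text, vec in self.items]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


store = InMemoryVectorStore()
store.add("User prefers TypeScript for frontend work")
store.add("Project database is Postgres")
store.add("User lives in Berlin")
top = store.search("which database does the project use", k=1)
```

Swapping `embed` for a real model and the list for a real database changes the quality of the matches, not the shape of the loop.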

But here is what most tutorials skip. Dumping raw conversation history into a vector store produces terrible results. The retrieval is noisy. Irrelevant memories get pulled in. Important ones get buried.

You need structure.

Designing a Memory Architecture

Organize memories by type. This is not optional. It is the difference between a useful memory system and a junk drawer.

Factual memories store things the user has told you: their name, role, preferences, and constraints. These are high-confidence facts that rarely change, and they should be retrievable by direct lookup.

Decision memories capture choices made and their rationale. "We chose Postgres over MongoDB because the data is highly relational." These are gold for maintaining consistency across sessions.

Outcome memories record what happened when the agent took action. Did the code compile? Did the user like the output? Did the strategy work? These enable the agent to learn from experience.

Preference memories track patterns in user behavior. They prefer concise responses. They always want code examples. They get frustrated by long explanations. These are inferred, not stated, which means lower confidence but high impact.

Tag every memory with metadata. Timestamps, confidence levels, source (user-stated vs. inferred), category, and relevance scope. This metadata is what makes retrieval precise instead of noisy.
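One way to encode these types and their metadata is a small typed record. The field names, the `scope` convention, and the dictionary used for direct fact lookup are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class MemoryType(Enum):
    FACT = "fact"
    DECISION = "decision"
    OUTCOME = "outcome"
    PREFERENCE = "preference"


class Source(Enum):
    USER_STATED = "user_stated"
    INFERRED = "inferred"


@dataclass
class Memory:
    content: str
    kind: MemoryType
    source: Source
    confidence: float  # 0.0 to 1.0; inferred memories usually get lower values
    scope: str = "global"  # relevance scope, e.g. a project name (hypothetical convention)
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self) -> None:
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0.0 and 1.0")


# Factual memories keyed for direct lookup instead of similarity search.
facts: dict[str, Memory] = {
    "user.role": Memory("Backend engineer", MemoryType.FACT, Source.USER_STATED, 0.95),
}

decision = Memory(
    "Chose Postgres over MongoDB because the data is highly relational",
    MemoryType.DECISION,
    Source.USER_STATED,
    0.9,
    scope="project:billing",  # hypothetical project name
)
```

Because `kind`, `source`, and `scope` are explicit fields, retrieval can filter on them before any similarity search runs.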

The Memory Management Problem Nobody Talks About

Memory systems degrade over time. It is inevitable. Here is why.

Information becomes stale. A user's tech stack changes. Their preferences evolve. Decisions get reversed. If your memory system treats a six-month-old preference with the same weight as one stated yesterday, it will produce increasingly wrong results.

Implement decay functions. Memories that are not accessed lose relevance over time. Memories that are frequently retrieved gain permanence. Memories that are explicitly contradicted by newer information get deprecated. This is not deletion. It is relevance weighting.
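Here is a minimal decay sketch, assuming exponential decay with a half-life. Each retrieval extends the half-life, so frequently used memories gain permanence. Deprecation multiplies the weight by a penalty instead of deleting anything. The 30-day half-life and 0.1 penalty are arbitrary placeholders to tune, not recommended values.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class MemoryStats:
    last_accessed: datetime
    access_count: int = 0
    deprecated: bool = False  # set when newer information contradicts this memory


def record_access(stats: MemoryStats, now: datetime) -> None:
    # Each retrieval resets the clock and makes future decay slower.
    stats.last_accessed = now
    stats.access_count += 1


def relevance_weight(
    stats: MemoryStats,
    now: datetime,
    base_half_life_days: float = 30.0,
    deprecated_penalty: float = 0.1,
) -> float:
    # Frequently retrieved memories decay more slowly: every access extends the half-life.
    half_life = base_half_life_days * (1 + stats.access_count)
    age_days = (now - stats.last_accessed).total_seconds() / 86400
    weight = 0.5 ** (age_days / half_life)
    if stats.deprecated:
        weight *= deprecated_penalty  # down-weight, never delete
    return weight


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
stale = MemoryStats(last_accessed=now - timedelta(days=30))
print(relevance_weight(stale, now))  # 0.5: exactly one half-life has passed
```

The weight is meant to multiply a retrieval score, so a deprecated memory can still surface when nothing better matches.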

Conflicting memories are the second problem. A user says "I prefer TypeScript" in January and "We are switching to Go" in March. Both memories exist. Without conflict resolution, the agent might reference either one, depending on which scores higher in vector similarity. Build explicit conflict detection that flags contradictions and resolves them by recency, by specificity, or by asking the user.
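Here is a minimal sketch of recency-based resolution. It assumes each memory has already been normalized to a `subject` key, for example `primary_language`. That normalization is the hard part and is hypothetical here; in practice you might ask an LLM whether two memories describe the same thing. Specificity rules or a question to the user would slot in where `resolve_by_recency` picks a winner.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Claim:
    subject: str  # hypothetical normalized key, e.g. "primary_language"
    value: str
    stated_at: datetime
    deprecated: bool = False


def find_conflicts(claims: list[Claim]) -> dict[str, list[Claim]]:
    # Group active claims by subject; more than one distinct value means a conflict.
    by_subject: dict[str, list[Claim]] = defaultdict(list)
    for claim in claims:
        if not claim.deprecated:
            by_subject[claim.subject].append(claim)
    return {s: group for s, group in by_subject.items() if len({c.value for c in group}) > 1}


def resolve_by_recency(claims: list[Claim]) -> list[str]:
    # Newest claim wins; older contradicting claims are deprecated, not deleted.
    conflicts = find_conflicts(claims)
    for group in conflicts.values():
        newest = max(group, key=lambda c: c.stated_at)
        for claim in group:
            if claim.value != newest.value:
                claim.deprecated = True
    return list(conflicts)  # subjects that were flagged and resolved


ts = Claim("primary_language", "TypeScript", datetime(2024, 1, 15))
go = Claim("primary_language", "Go", datetime(2024, 3, 10))
db = Claim("database", "Postgres", datetime(2024, 2, 1))
claims = [ts, go, db]
```

Returning the flagged subjects gives you a hook for logging or for asking the user to confirm the resolution.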

Retrieval Strategies That Actually Work

Vector similarity search is necessary but not sufficient. It finds semantically similar content, but semantically similar is not always relevant.

Combine vector search with metadata filtering. When the agent is working on a coding task, filter memories to the "technical decisions" category before running similarity search. When discussing project strategy, filter to "decisions" and "outcomes."
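Filtering before ranking can be sketched as follows. The `category` strings and the precomputed `embedding` vectors are assumptions. Real vectors would come from whatever embedding model you use; the tiny two-dimensional ones here exist only to make the ranking easy to follow.

```python
import math
from dataclasses import dataclass


@dataclass
class StoredMemory:
    text: str
    category: str
    embedding: list[float]  # assumed to come from your embedding model


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def filtered_search(
    memories: list[StoredMemory],
    query_embedding: list[float],
    categories: set[str],
    k: int = 3,
) -> list[StoredMemory]:
    # 1. Metadata filter: only memories in the allowed categories are candidates.
    candidates = [m for m in memories if m.category in categories]
    # 2. Similarity ranking over the survivors only.
    candidates.sort(key=lambda m: cosine(query_embedding, m.embedding), reverse=True)
    return candidates[:k]


memories = [
    StoredMemory("Chose Postgres over MongoDB: data is highly relational", "decision", [0.9, 0.1]),
    StoredMemory("User enjoys database trivia", "preference", [1.0, 0.0]),
    StoredMemory("Migration to Postgres finished without downtime", "outcome", [0.6, 0.8]),
]
results = filtered_search(memories, query_embedding=[1.0, 0.0], categories={"decision", "outcome"})
```

Without the filter, the trivia preference would rank first because it is the closest vector, which is exactly the "similar but not relevant" failure. In production you would push the filter into the database query itself, since the vector databases named earlier support metadata filtering.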

Use recency bias. Recent memories should be weighted higher unless the query is explicitly about historical context. A simple time-decay multiplier on similarity scores handles this.
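One simple form for that multiplier assumes exponential decay with a half-life $h$ that you choose. It is a tuning parameter, not a prescribed value. Here $\text{sim}(q, m)$ is the similarity between query $q$ and memory $m$, and $\Delta t$ is the memory's age:

$$
\text{score}(q, m) = \text{sim}(q, m) \cdot 2^{-\Delta t / h}
$$

With $h = 30$ days, a memory with similarity 0.80 that is 60 days old scores $0.80 \cdot 2^{-2} = 0.20$. A memory with similarity 0.60 that is 3 days old scores $0.60 \cdot 2^{-0.1} \approx 0.56$, so the recent memory ranks first. For explicitly historical queries, set the multiplier to 1.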

Consider memory hierarchies. Not every memory needs to be in the vector store. Keep the most recent and most accessed memories in a fast cache. Store the full corpus in the vector database. Archive truly old memories to cold storage. This loosely mirrors how human memory works: what you use often stays close at hand, and what you rarely touch recedes.
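Here is a minimal three-tier sketch. An LRU `OrderedDict` acts as the hot cache, a plain dict stands in for the vector database, and another dict stands in for cold storage. The class name, the cache size, and the 180-day archive threshold are hypothetical; a real system would back each tier with actual infrastructure.

```python
from collections import OrderedDict
from datetime import datetime, timedelta
from typing import Optional


class TieredMemory:
    """Hot LRU cache, warm full store, cold archive (sketch)."""

    def __init__(self, cache_size: int = 100, archive_after: timedelta = timedelta(days=180)):
        self.cache_size = cache_size
        self.archive_after = archive_after
        self.cache: "OrderedDict[str, str]" = OrderedDict()  # hot: least recently used first
        self.store: dict[str, str] = {}    # warm: full corpus (stand-in for the vector DB)
        self.archive: dict[str, str] = {}  # cold: rarely touched memories
        self.last_access: dict[str, datetime] = {}

    def put(self, key: str, value: str, now: datetime) -> None:
        self.store[key] = value
        self.last_access[key] = now
        self._cache(key, value)

    def get(self, key: str, now: datetime) -> Optional[str]:
        if key in self.cache:
            value = self.cache[key]
        elif key in self.store:
            value = self.store[key]
        elif key in self.archive:
            value = self.archive.pop(key)  # promote back out of cold storage
            self.store[key] = value
        else:
            return None
        self.last_access[key] = now
        self._cache(key, value)
        return value

    def _cache(self, key: str, value: str) -> None:
        self.cache[key] = value
        self.cache.move_to_end(key)  # mark as most recently used
        while len(self.cache) > self.cache_size:
            self.cache.popitem(last=False)  # evict the least recently used entry

    def archive_stale(self, now: datetime) -> None:
        # Move memories untouched for longer than archive_after into cold storage.
        for key in list(self.store):
            if now - self.last_access[key] > self.archive_after:
                self.archive[key] = self.store.pop(key)
                self.cache.pop(key, None)
```

Archived memories are not lost: a lookup that hits cold storage promotes the memory back into the warm and hot tiers.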

Building Memory Into Your Agent Loop

The agent loop with memory looks like this. User sends a message. Agent retrieves relevant memories. Agent processes the message with memories as additional context. Agent generates a response. Agent decides what from this interaction is worth remembering. New memories get stored.
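The loop can be sketched as a single function with each step injected as a callable. The parameter names are hypothetical: `generate` would wrap your model call, and `extract` would be the step that decides what is worth remembering.

```python
from typing import Callable


def agent_turn(
    user_message: str,
    retrieve: Callable[[str], list[str]],
    generate: Callable[[str, list[str]], str],
    extract: Callable[[str, str], list[str]],
    store: Callable[[str], None],
) -> str:
    # Step 1: the user's message arrives as `user_message`.
    # Step 2: pull in memories relevant to this message.
    memories = retrieve(user_message)
    # Steps 3-4: process the message with memories as extra context and respond.
    response = generate(user_message, memories)
    # Step 5: decide what from this exchange is worth remembering.
    worth_keeping = extract(user_message, response)
    # Step 6: persist only those memories.
    for memory in worth_keeping:
        store(memory)
    return response


# Usage with trivial stand-ins for the memory store and the model.
memory_db = ["Project database is Postgres"]
reply = agent_turn(
    "Which index type should I use for our database?",
    retrieve=lambda q: [m for m in memory_db if "database" in m.lower()],
    generate=lambda q, mems: f"Answer using {len(mems)} remembered fact(s)",
    extract=lambda q, r: ["User is evaluating index types"] if "index" in q.lower() else [],
    store=memory_db.append,
)
```

Keeping each step behind its own callable makes it easy to swap the extraction policy without touching retrieval or generation.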

That fifth step is the one most implementations skip. They store everything or nothing. Neither works. Build a memory extraction step that identifies facts, decisions, preferences, and outcomes worth persisting. Use the LLM itself for this. It is remarkably good at identifying what is important in a conversation.
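Here is a minimal sketch of that extraction step. It assumes `llm` is any function that takes a prompt string and returns the model's text, a hypothetical wrapper around whichever client you use. The prompt wording and JSON shape are illustrative. The important part is validating the output before storing it, since models sometimes return malformed or off-schema JSON.

```python
import json
from typing import Callable

ALLOWED_TYPES = {"fact", "decision", "preference", "outcome"}

EXTRACTION_PROMPT = """From the conversation below, extract only information worth remembering
in future sessions. Respond with a JSON array of objects with the keys "type" (one of: fact,
decision, preference, outcome), "content", and "confidence" (a number from 0 to 1).
Respond with [] if nothing qualifies.

Conversation:
{conversation}"""


def extract_memories(conversation: str, llm: Callable[[str], str]) -> list[dict]:
    raw = llm(EXTRACTION_PROMPT.format(conversation=conversation))
    try:
        items = json.loads(raw)
    except json.JSONDecodeError:
        return []  # unparseable output: store nothing rather than garbage
    if not isinstance(items, list):
        return []
    memories = []
    for item in items:
        kind = item.get("type") if isinstance(item, dict) else None
        if not isinstance(kind, str) or kind not in ALLOWED_TYPES:
            continue  # drop off-schema entries
        content = item.get("content")
        if not isinstance(content, str) or not content.strip():
            continue  # drop empty memories
        confidence = item.get("confidence", 0.5)
        if not isinstance(confidence, (int, float)):
            confidence = 0.5  # fall back to a neutral default
        memories.append({
            "type": kind,
            "content": content.strip(),
            "confidence": min(max(float(confidence), 0.0), 1.0),  # clamp to [0, 1]
        })
    return memories
```

The four allowed types mirror the memory categories above, so whatever survives validation can be routed straight into the matching store.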

The result is an agent that gets better over time. Not because it is retrained. Not because someone manually updates its knowledge base. Because it accumulates relevant context through natural interaction.

That is the difference between a tool you use and an assistant you rely on. Memory is what makes the transition possible.
